Having begun my career focused intently on the music business, I find it interesting that I gravitated to video games. My path ran from singer in a band to recording engineer and producer, and now to Director of a multifaceted game audio development studio. Looking back, I see how every step was guided, intentionally or not, by the relentless march of technology.

Let’s face it, we’re all obsessed with our windows into tech. As I walk around South of Market in San Francisco, I see more heads down than up! With games on every mobile device, they have become as ubiquitous, and as much a defining part of our culture, as music and film. This is only the beginning of an ever more immersive experience, with Oculus, HoloLens, and Morpheus all racing to deliver on the promise of completely immersive virtual reality!

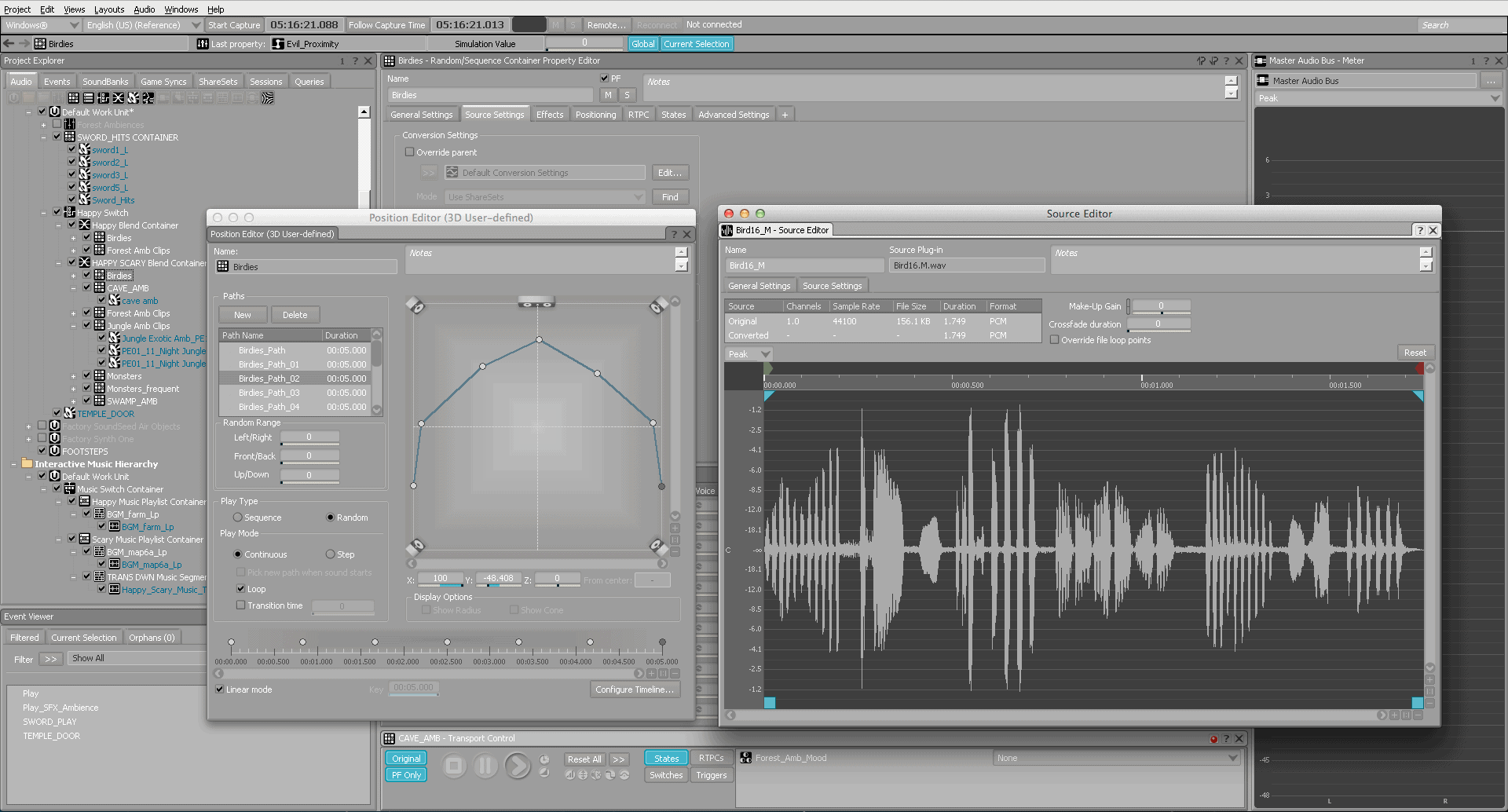

The same has been true for music and sound in games, and for me this is where it gets exciting. As game delivery platforms and the engines that drive them have become more and more powerful, so too have the audio middleware tools that let audio designers set up complex audio behaviors that interface directly with the game engine. It seems long ago that a programmer had to add lines of code to the game engine to manually trigger sounds in the audio engine for every event that needed a response. Such a sound call might seem as simple as “Hey, the tiger roared,” but in reality it was often sent as a string of cryptic code. Nowadays, the game programmer can pass a much simpler call, such as “The tiger roared,” to the audio middleware, and any number of things can happen as defined by you, the audio designer: a random selection from several different roars, each with a random pitch shift, plus a 3-in-10 chance of a distant flock of birds scrambling to the sky in response.
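To make the tiger example concrete, here is a minimal Python sketch of the idea. It assumes a hypothetical, designer-authored event table and is not the actual API of Wwise, FMOD, or any other middleware; the names `SoundEvent`, `EVENTS`, `post_event`, and the asset filenames are invented for illustration. The game code only posts the event name, and the table decides which roar plays, how far its pitch is shifted, and whether the birds follow.

```python
from __future__ import annotations

import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class SoundEvent:
    """Hypothetical designer-authored event; the programmer only posts its name."""
    variations: list[str]             # candidate assets, e.g. several roar recordings
    pitch_range: tuple[float, float]  # random pitch shift in semitones (min, max)
    follow_up: Optional[str] = None   # optional secondary sound
    follow_up_chance: float = 0.0     # probability that the follow-up also plays


# Behavior owned by the audio designer, not the game programmer (illustrative values).
EVENTS = {
    "tiger_roared": SoundEvent(
        variations=["roar_01.wav", "roar_02.wav", "roar_03.wav"],
        pitch_range=(-2.0, 2.0),
        follow_up="distant_birds_scatter.wav",
        follow_up_chance=0.3,  # a 3-in-10 chance
    ),
}


def post_event(name: str, rng: random.Random) -> list[tuple[str, float]]:
    """Resolve an event name into (asset, pitch_shift) pairs to play."""
    event = EVENTS[name]
    # Pick one roar at random and give it a random pitch shift within the range.
    plays = [(rng.choice(event.variations), rng.uniform(*event.pitch_range))]
    # Roll the dice for the optional follow-up sound.
    if event.follow_up and rng.random() < event.follow_up_chance:
        plays.append((event.follow_up, 0.0))
    return plays


if __name__ == "__main__":
    rng = random.Random(42)
    for _ in range(3):
        # The game side sends one simple call; the variety comes from the table.
        print(post_event("tiger_roared", rng))
```

In middleware this kind of behavior is typically configured in the authoring tool rather than written by hand, but the division of labor is the same: the programmer sends one simple call, and the designer owns what it sounds like.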

There was a time in games when content creators were at the mercy of the programmers, and a tight deadline combined with an unknowing coder’s hand could butcher our vision of the perfect adaptive score. But now, in the age of powerful middleware tools like Wwise, FMOD, Fabric, Master Audio and others, the content creators (great composers, imaginative sound designers, and the mixers and engineers who help bring the recorded music to life) can all take part in making their work respond and sound the way they intend.

Today’s audio middleware tools have changed how audio geeks like me and my team collaborate directly with developers, contributing to the creation of a great interactive experience. As we push the boundaries of adaptive sound, we know it’s not enough to just create great sound and music assets; it’s the quality of the end user’s audio experience that ultimately matters. These tools empower us to create interactive sonic experiences in surround sound that completely immerse the player in new and innovative ways. This is an exciting place to be, and from my perspective it’s only getting brighter!